Coffee Space

I recently read an article by Mark McNally, where he discusses running a website that can theoretically handle 4.2 million requests per day for just £4 per month. He achieves this with 1 CPU and 2GB of RAM. This of course got him to the front page of HackerNews and all the attention that brings with it 1.

The tool he introduces for testing, ApacheBench, is new to me, but it was already installed on my machine - awesome! To run a test, you can run:

    ab -n <NUM_TESTS> -c <CONCURRENT_TESTS> <DOMAIN>

There are of course caveats in the tests that Mark performs, some of which he addresses and some of which he does not:

All that said, it beats zero testing! And it at least allows us to compare changes to the website in some meaningful way, even if it means we cannot accurately guess how much traffic we can handle - we can at least say with reasonable confidence it is more or less than some previous state.

Instead of testing some selected web pages, I want to test all the different web pages automatically. I therefore wrote a script for automating the data collection:

    #!/bin/bash

    DOMAIN="https://coffeespace.org.uk/"
    RESULTS="perf-$(date +"%Y-%m-%d_%H-%M-%S").csv"

    # main()
    #
    # The main entry function.
    #
    # @param $@ The command line parameters.
    main() {
      # Skip patterns that match nothing instead of passing them to ab
      shopt -s nullglob
      # Loop over the generated pages, up to three directories deep
      for f in www/*.html www/*/*.html www/*/*/*.html; do
        echo "url -> $DOMAIN$f"
        ab -n 1000 -c 100 -e temp.csv "$DOMAIN$f"
        # Drop the CSV header line and append the rest to the results
        tail -n +2 temp.csv >> "$RESULTS"
        rm temp.csv
      done
    }

    main "$@"

Note that I chose the same values for number of tests and number of concurrent connections as Mark did. Checking my network usage, I see about 650kB/s down and 150kB/s up.

Next I import the results into LibreOffice and generate some graphs…

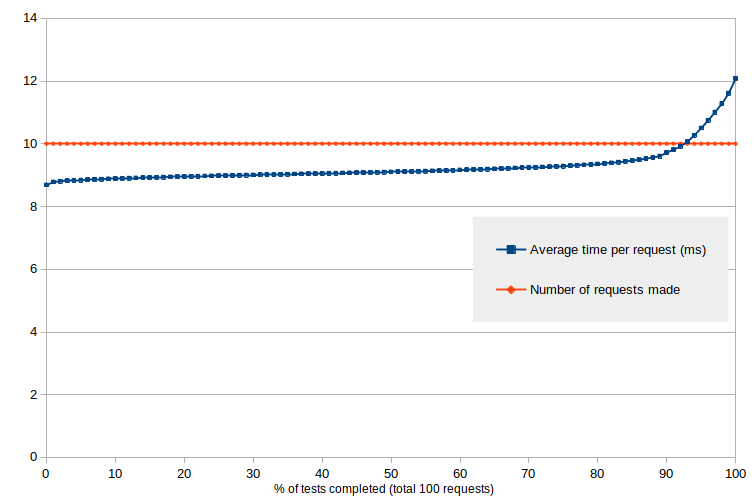
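For a quick number without opening a spreadsheet, the results file can also be summarised on the command line. This is only a minimal sketch: it assumes every row keeps the `percentage,time in ms` layout that `ab -e` writes, and the function name `mean_at_percentile` is made up for illustration.

```shell
#!/bin/bash

# mean_at_percentile()
#
# Averages the response time recorded at one percentile across all pages.
# Assumes rows of the form "<percentage>,<time in ms>", as written by ab -e.
#
# @param $1 The percentile to pick out, e.g. 50 for the median.
# @param $2 The results CSV to read.
mean_at_percentile() {
  awk -F, -v p="$1" '
    $1 == p { sum += $2; n++ }
    END { if (n > 0) printf "%.3f\n", sum / n }
  ' "$2"
}
```

Calling `mean_at_percentile 50 perf-<timestamp>.csv` would then print the mean of the per-page medians, which is one rough way to arrive at a "most pages" figure.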

A total of 211k tests were performed against the server, covering all `.html` pages in the statically generated part. Most pages were delivered in about 9ms. If we round this up to 12ms, the server could serve in excess of 80 pages per second, or about 7 million pages per day!
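The arithmetic behind those figures can be written down as a small sketch. It assumes, as the estimate itself does, that pages are served one after another at a fixed time per page; the function names are hypothetical.

```shell
#!/bin/bash

# pages_per_second()
#
# Whole pages served per second at a fixed time per page.
#
# @param $1 The time to serve one page, in whole milliseconds.
pages_per_second() {
  echo $(( 1000 / $1 ))
}

# pages_per_day()
#
# Whole pages served per day at a fixed time per page.
#
# @param $1 The time to serve one page, in whole milliseconds.
pages_per_day() {
  echo $(( 1000 * 60 * 60 * 24 / $1 ))
}
```

With the rounded-up 12ms, `pages_per_second 12` prints `83` and `pages_per_day 12` prints `7200000`, matching the "in excess of 80" and "about 7 million" figures.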

As I mentioned previously - this server has just 128MB of RAM for $1.50 per month. Suck it Mark!

But if we look at the data, things are not quite so simple. As we keep hitting the server, the time to return a web page actually increases 2. It’s likely that resources are being consumed on the server (such as RAM) and it is struggling to keep up 3.
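One rough way to check for that kind of slowdown is to compare the early part of the run with the late part. The sketch below assumes the results file holds `percentage,time in ms` rows in the order the pages were tested, and takes the 50% row as each page's median; `median_drift` is a made-up name.

```shell
#!/bin/bash

# median_drift()
#
# Prints the mean of the per-page medians for the first half of the run,
# followed by the same figure for the second half.
#
# @param $1 The results CSV to read.
median_drift() {
  awk -F, '
    $1 == 50 { t[n++] = $2 }
    END {
      half = int(n / 2)
      if (half == 0) exit
      for (i = 0; i < half; i++) first += t[i]
      for (i = n - half; i < n; i++) second += t[i]
      printf "%.3f %.3f\n", first / half, second / half
    }
  ' "$1"
}
```

If the second number is clearly larger than the first, the pages tested later were slower on average. That would be consistent with the server degrading, although it could equally mean the later pages are simply heavier.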

It’s good to know that the server could handle about 100 requests per second and still stand up though.

Currently the testing is not so ideal. There are a bunch of variables I cannot currently account for, such as:

Ideally we need better tools for more consistent tests, but `ab` is surely better than nothing.

Perhaps in the future I could look at implementing disk caching of some kind, where content is stored in a RAM disk (on a better server). Currently caching would not really speed up the statically served content.

I could also look to minify the resources, such as the CSS and JS. Currently they are entirely human readable.

One thing I would like to look towards is providing a future-proof Data URL that encodes the meat of the web page as a link. This would be instantly loadable and always available offline. There is a generally accepted length limit of 65k bytes, which could be overcome by putting the text through compression, such as a ZIP file.
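As a rough sketch of the idea, a page can be turned into a base64 Data URL and checked against a length limit. The default limit of 65536 is an assumed reading of the 65k figure above, and the function names are hypothetical.

```shell
#!/bin/bash

# html_data_url()
#
# Reads HTML on standard input and prints it as a base64 Data URL.
html_data_url() {
  # tr strips the line wrapping that some base64 implementations add
  printf 'data:text/html;base64,%s\n' "$(base64 | tr -d '\n')"
}

# fits_url_limit()
#
# Succeeds if the URL is no longer than the limit.
#
# @param $1 The URL to check.
# @param $2 The maximum length (assumed default: 65536).
fits_url_limit() {
  [ "${#1}" -le "${2:-65536}" ]
}
```

For example, `url="$(html_data_url < page.html)"` followed by `fits_url_limit "$url" && echo "fits"` would report whether a page squeezes in. Compressing first, say by piping through `gzip -c`, would shrink the payload, but whatever opens the link would then need to unpack it, which this sketch does not attempt.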

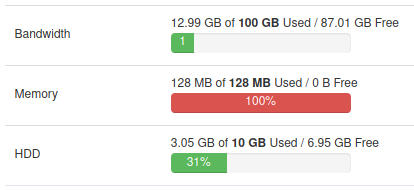

Note to self: I need to stop performing tests, I’ve already used 11% (13GB) of the server’s 100GB monthly bandwidth - ouch!